作者:MeshCloud脉时云公有云架构师 于文宝

部署和使用使用 BigQuery 创建数据仓库快速启动解决方案。本解决方案演示如何使用 BigQuery 作为数据仓库,使用 Looker Studio 作为信息中心和可视化工具在 Google Cloud 中构建数据仓库。

构建数据仓库的常见使用情形包括:

- 汇总和创建营销分析仓库,以提高收入或其他客户指标。

- 构建财务报告和分析。

- 构建运营信息中心以提高公司绩效。

目标

- 了解数据如何流入云数据仓库,以及如何使用 SQL 转换数据。

- 根据数据构建信息中心以执行数据分析。

- 安排 SQL 语句以定期更新数据。

- 创建机器学习模型以预测一段时间内的数据值。

使用的产品

此解决方案使用以下 Google Cloud 产品:

- BigQuery:伸缩能力极强的全代管式数据仓库,内置有机器学习功能。

- Cloud Storage:一种企业级服务,为各种数据类型提供费用低廉、不受限制的对象存储。可从 Google Cloud 内部和外部访问数据,并以地理位置冗余方式复制数据。

- Looker Studio:自助式商业智能平台,可帮助您创建和共享数据洞见。

以下 Google Cloud 产品用于暂存解决方案中首次使用的数据:

- Cloud Functions:一种轻量级无服务器计算服务,可让您创建单一用途的独立函数来响应 Google Cloud 事件,而无需管理服务器或运行时环境。

- Pub/Sub:一种异步且可伸缩的通讯服务,可将生成消息的服务与处理这些消息的服务分离开。

- Eventarc:该服务可管理分离微服务之间的状态更改(事件)流,同时管理传送、安全、授权、可观测性和错误处理。

- Workflows:一个全代管式编排平台,该平台按指定顺序以工作流的形式执行服务。Workflows 可以组合各种服务,包括 Cloud Run 或 Cloud Functions 上托管的自定义服务、Google Cloud 服务(如 BigQuery)以及任何基于 HTTP 的 API。

架构图

测试demo

此解决方案部署的示例仓库分析纽约市出租车数据,以了解出租车公司在一段时间内的业绩。下图展示了该解决方案部署的 Google Cloud 资源的架构。

解决方案流程

该架构表示用于填充和转换数据仓库数据的常见数据流动:

- 数据发送到 Cloud Storage 存储桶。

- Cloud Functions 辅助数据移动。

- 使用 SQL 存储过程将数据从外部表加载到 BigQuery 中。

- 使用 SQL 存储过程在 BigQuery 中转换数据。

- 根据数据创建信息中心,以使用 Looker Studio 执行更多分析。

获取所需的 IAM 权限

如需开始部署过程,您需要下表中列出的 Identity and Access Management (IAM) 权限。如果您拥有计划在其中部署解决方案的项目的 roles/owner 基本角色,则您已经拥有所有必要的权限。如果您没有 roles/owner 角色,请让管理员授予您这些权限(或包含这些权限的角色)。

| 所需的 IAM 权限 | 包含所需权限的预定义角色 |

| serviceusage.services.enable | Service Usage Admin (roles/serviceusage.serviceUsageAdmin) |

| iam.serviceAccounts.create | Service Account Admin (roles/iam.serviceAccountAdmin) |

| resourcemanager.projects.setIamPolicy | Project IAM Admin (roles/resourcemanager.projectIamAdmin) |

| config.deployments.create config.deployments.list | Cloud Config Manager Admin (roles/config.admin) |

使用 Terraform:如果要自定义解决方案,或者要使用基础架构即代码 (IaC) 方法自动预配和管理资源,请使用此方法。从 GitHub 下载 Terraform 配置,根据需要可以选择自定义代码,然后使用 Terraform 部署解决方案。部署解决方案后,您可以继续使用 Terraform 来管理该解决方案。

测试代码demo

https://github.com/terraform-google-modules/terraform-google-bigquery.git

部署步骤;

设置 Terraform 客户端 (参考官网)

查看客户端版本

terraform -v

Terraform v1.3.6

on linux_amd64克隆代码

git clone https://github.com/terraform-google-modules/terraform-google-bigquery.git

ls terraform-google-bigquery 查看代码配置说明

cd /tmp/terraform-google-bigquery/modules/data_warehouse

README.md assets main.tf outputs.tf variables.tf versions.tf- 在同一目录中,创建一个名为 terraform.tfvars 的文本文件。

- 在 terraform.tfvars 文件中,复制以下代码段,并为所需的变量设置值。

- 按照代码段中作为注释提供的说明进行操作。

- 此代码段仅包含您必须为其设置值的变量。Terraform 配置包括具有默认值的其他变量。如需查看所有变量和默认值,请参阅 $HOME/tmp/terraform-google-bigquery/modules/data_warehouse 目录中提供的 variables.tf 文件。

- 确保您在 terraform.tfvars 文件中设置的每个值都与 variables.tf 文件中声明的变量类型匹配。例如,如果为 variables.tf 文件中的变量定义的类型为 bool,则必须将 true 或 false 指定为该变量在 terraform.tfvars 文件中的值。

# This is an example of the terraform.tfvars file.

# The values in this file must match the variable types declared in variables.tf.

# The values in this file override any defaults in variables.tf.

# ID of the project in which you want to deploy the solution

project_id = "PROJECT_ID"

# Google Cloud region where you want to deploy the solution

# Example: us-central1

region = "REGION"

# Whether or not to enable underlying apis in this solution.

# Example: true

enable_apis = true

# Whether or not to protect BigQuery resources from deletion when solution is modified or changed.

# Example: false

force_destroy = false

# Whether or not to protect Cloud Storage resources from deletion when solution is modified or changed.

# Example: true

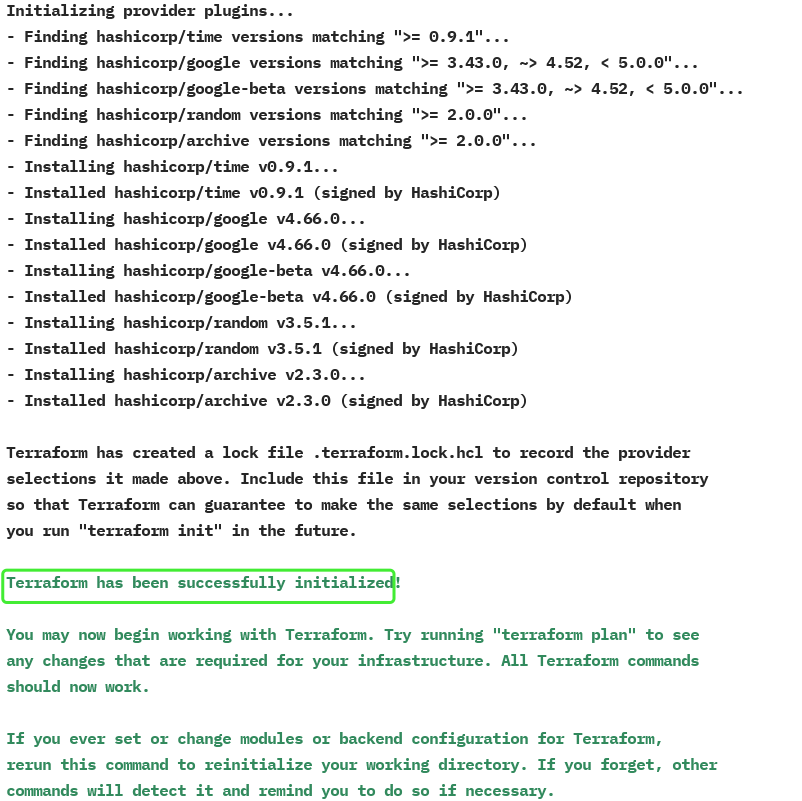

deletion_protection = true运行以下命令初始化 Terraform:

terraform init等待系统显示以下消息:

Terraform has been successfully initialized!

验证并查看 Terraform 配置

确保当前工作目录为 $

HOME/tmp/terraform-google-bigquery/modules/data_warehouse。 如果不是,请转到该目录

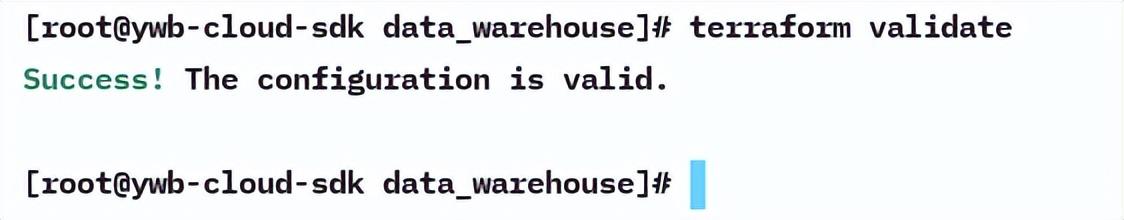

terraform validate如果该命令返回任何错误,请在配置中进行所需的更正,然后再次运行 terraform validate 命令。重复此步骤,直到命令返回以下消息:

Success! The configuration is valid.

查看配置中定义的资源:

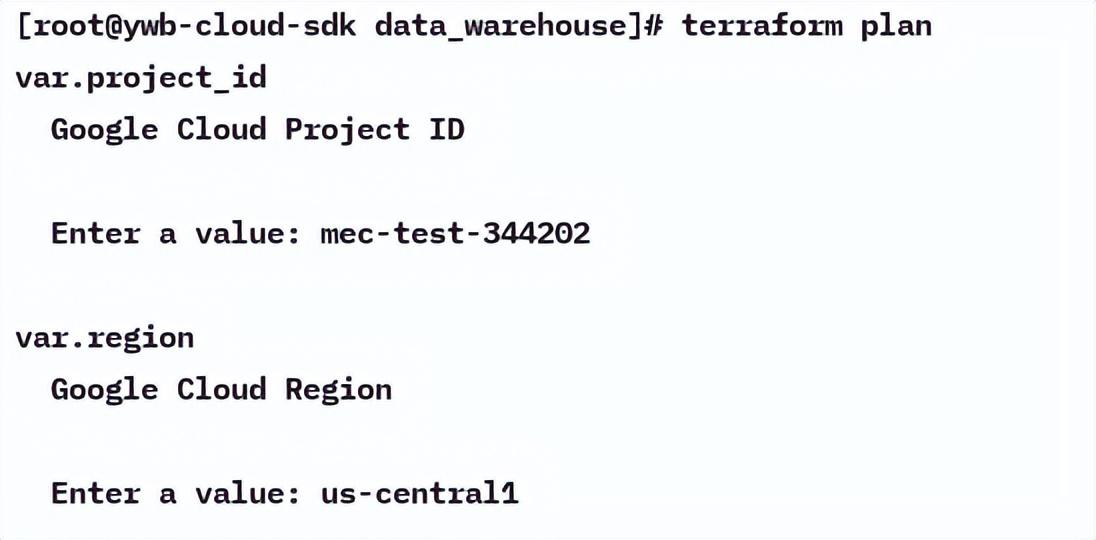

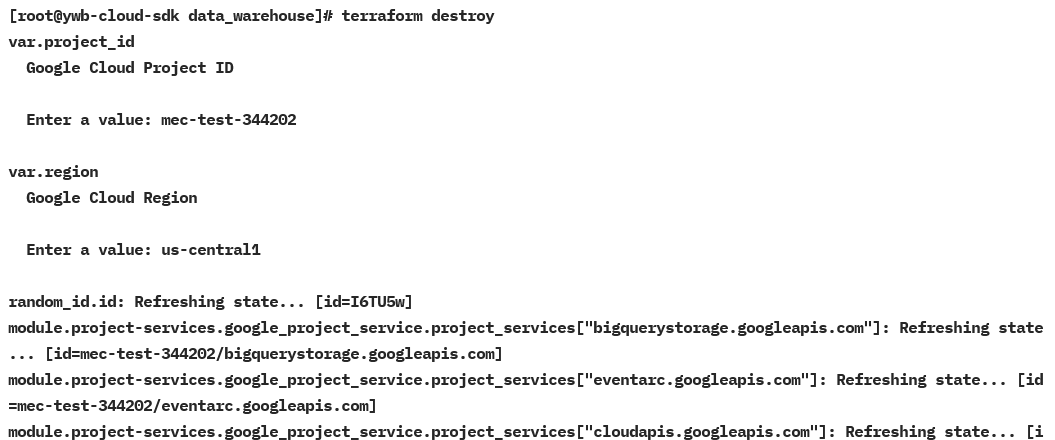

terraform plan

var.project_id

Google Cloud Project ID

Enter a value: mec-test-344202

var.region

Google Cloud Region

Enter a value: us-central1

预配资源

如果 Terraform 配置中不需要进一步更改,请部署资源。

- 确保当前工作目录为 $HOME/tmp/terraform-google-bigquery/modules/data_warehouse。 如果不是,请转到该目录。

- 应用 Terraform 配置:

terraform apply- 如果您未按照上文所述创建 terraform.tfvars 文件,则 Terraform 会提示您为没有默认值的变量输入值。输入必需的值。

- Terraform 会显示将创建的资源列表。

- 当系统提示您执行操作时,请输入 yes。

- Terraform 展示一些显示部署进度的消息。

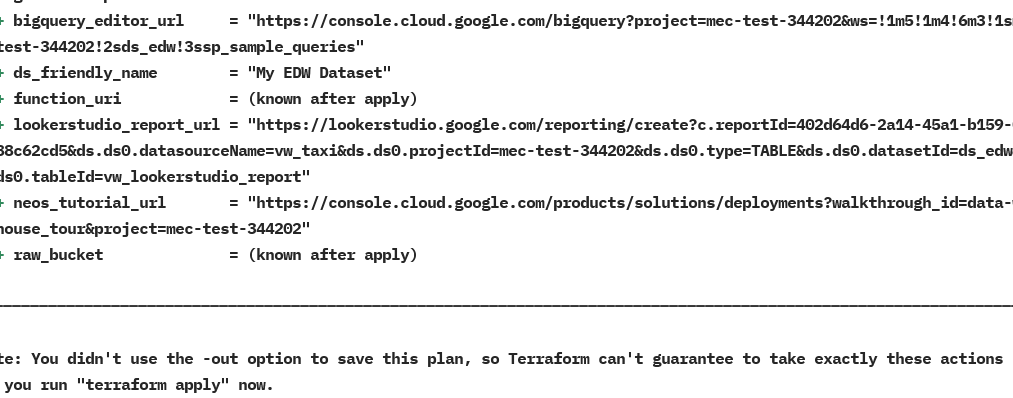

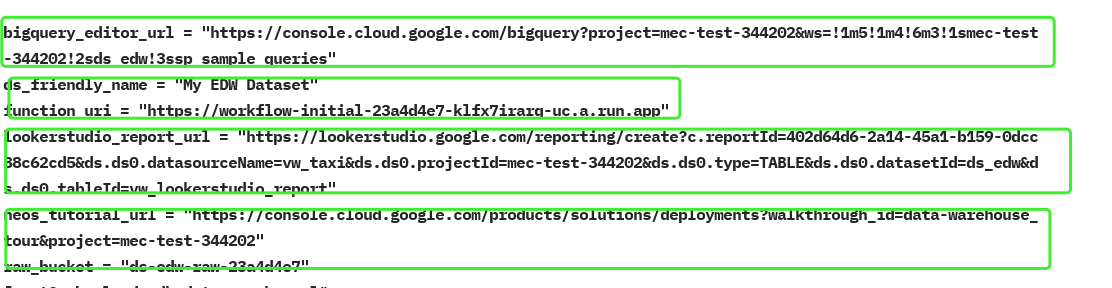

Terraform 输出还列出了您需要的以下其他信息:

- 已部署的信息中心的 Looker Studio 网址。

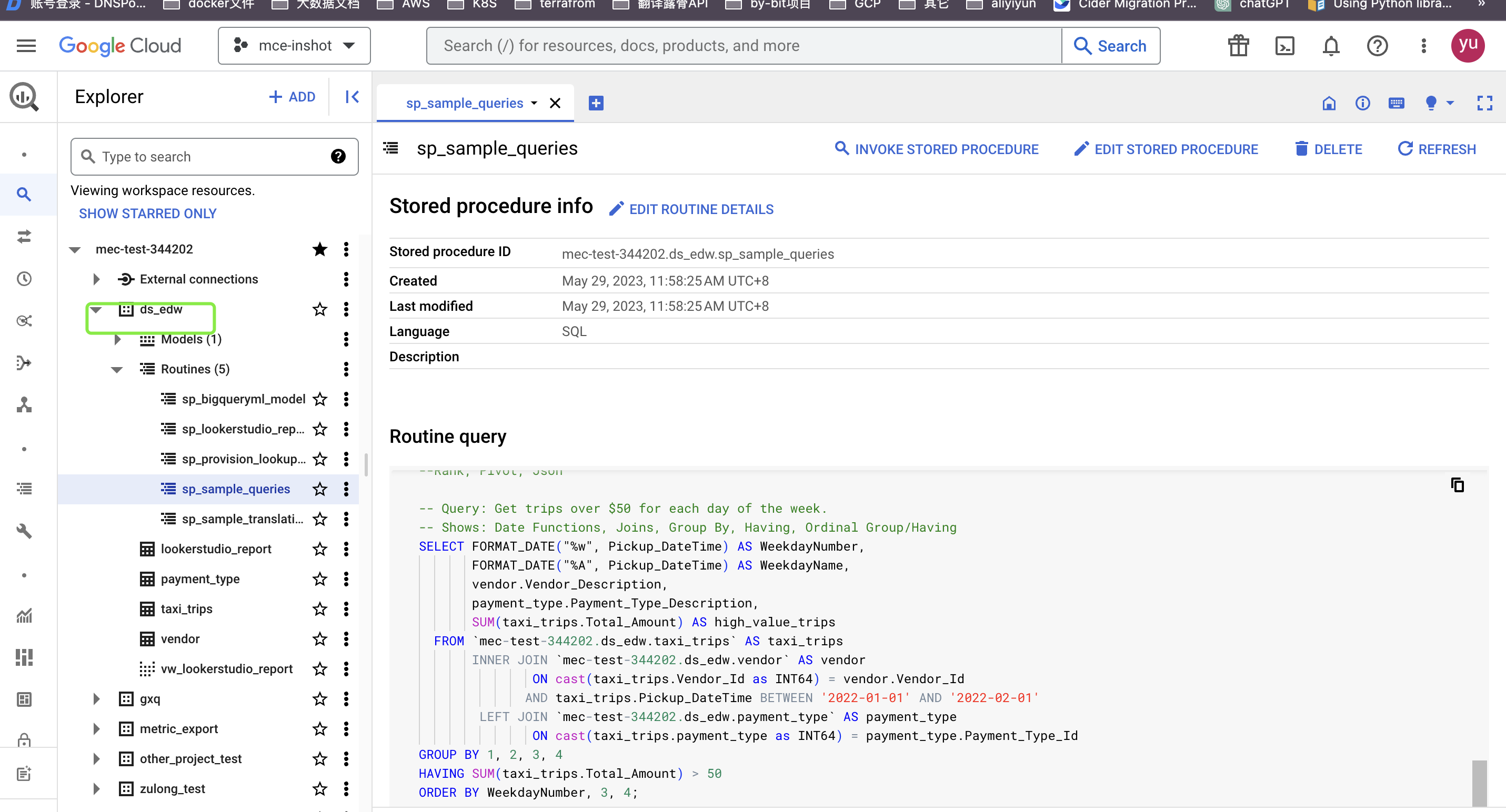

- 用于打开 BigQuery 编辑器以进行部分示例查询的链接。

以下示例展示了输出内容

bigquery_editor_url = "https://console.cloud.google.com/bigquery?project=mec-test-344202&ws=!1m5!1m4!6m3!1smec-test-344202!2sds_edw!3ssp_sample_queries"

ds_friendly_name = "My EDW Dataset"

function_uri = "https://workflow-initial-23a4d4e7-klfx7irarq-uc.a.run.app"

lookerstudio_report_url = "https://lookerstudio.google.com/reporting/create?c.reportId=402d64d6-2a14-45a1-b159-0dcc88c62cd5&ds.ds0.datasourceName=vw_taxi&ds.ds0.projectId=mec-test-344202&ds.ds0.type=TABLE&ds.ds0.datasetId=ds_edw&ds.ds0.tableId=vw_lookerstudio_report"

neos_tutorial_url = "https://console.cloud.google.com/products/solutions/deployments?walkthrough_id=data-warehouse_tour&project=mec-test-344202"

raw_bucket = "ds-edw-raw-23a4d4e7"验证创建的服务

- 数据发送到 Cloud Storage 存储桶。

- Cloud Functions 辅助数据移动。

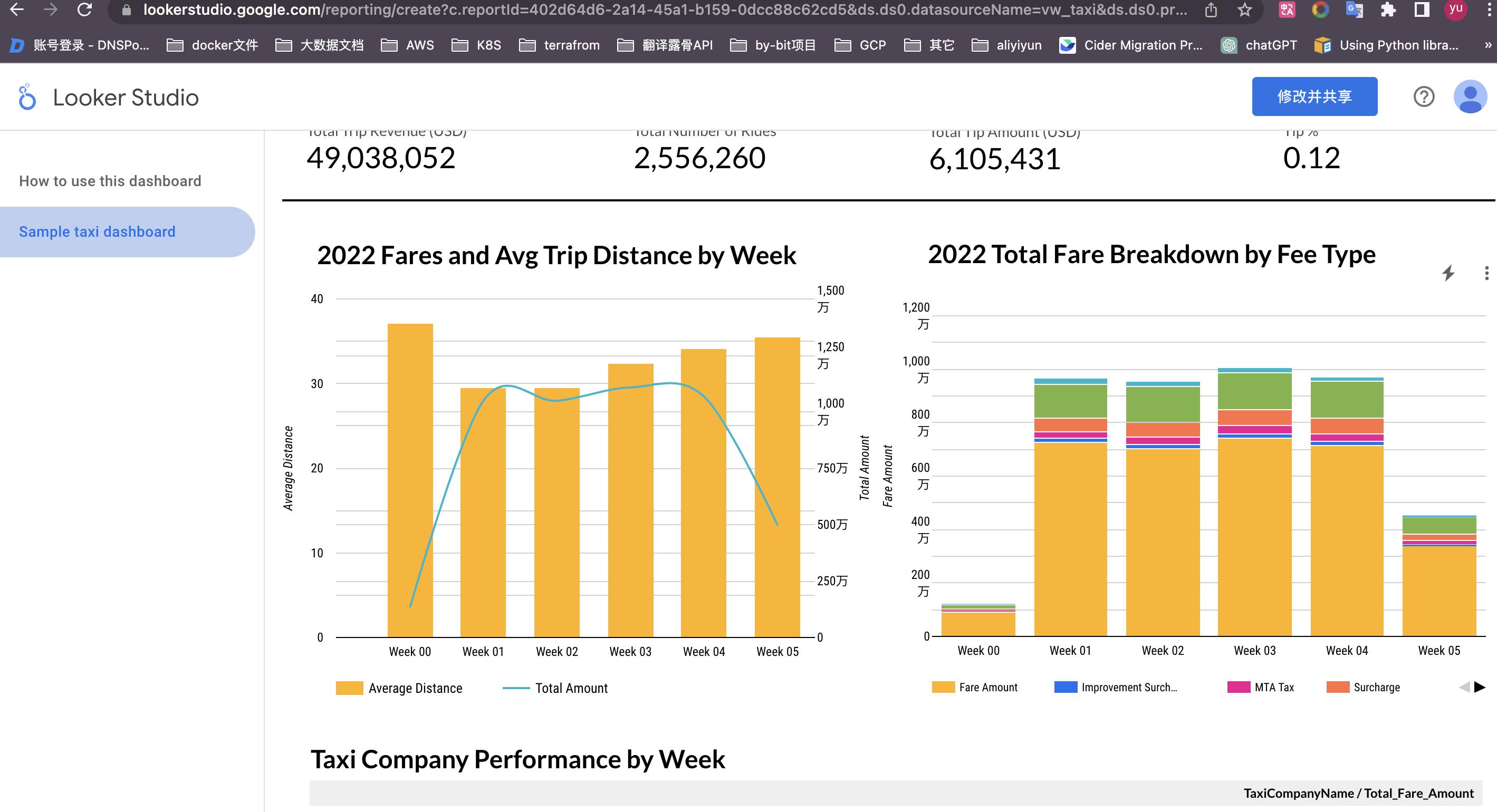

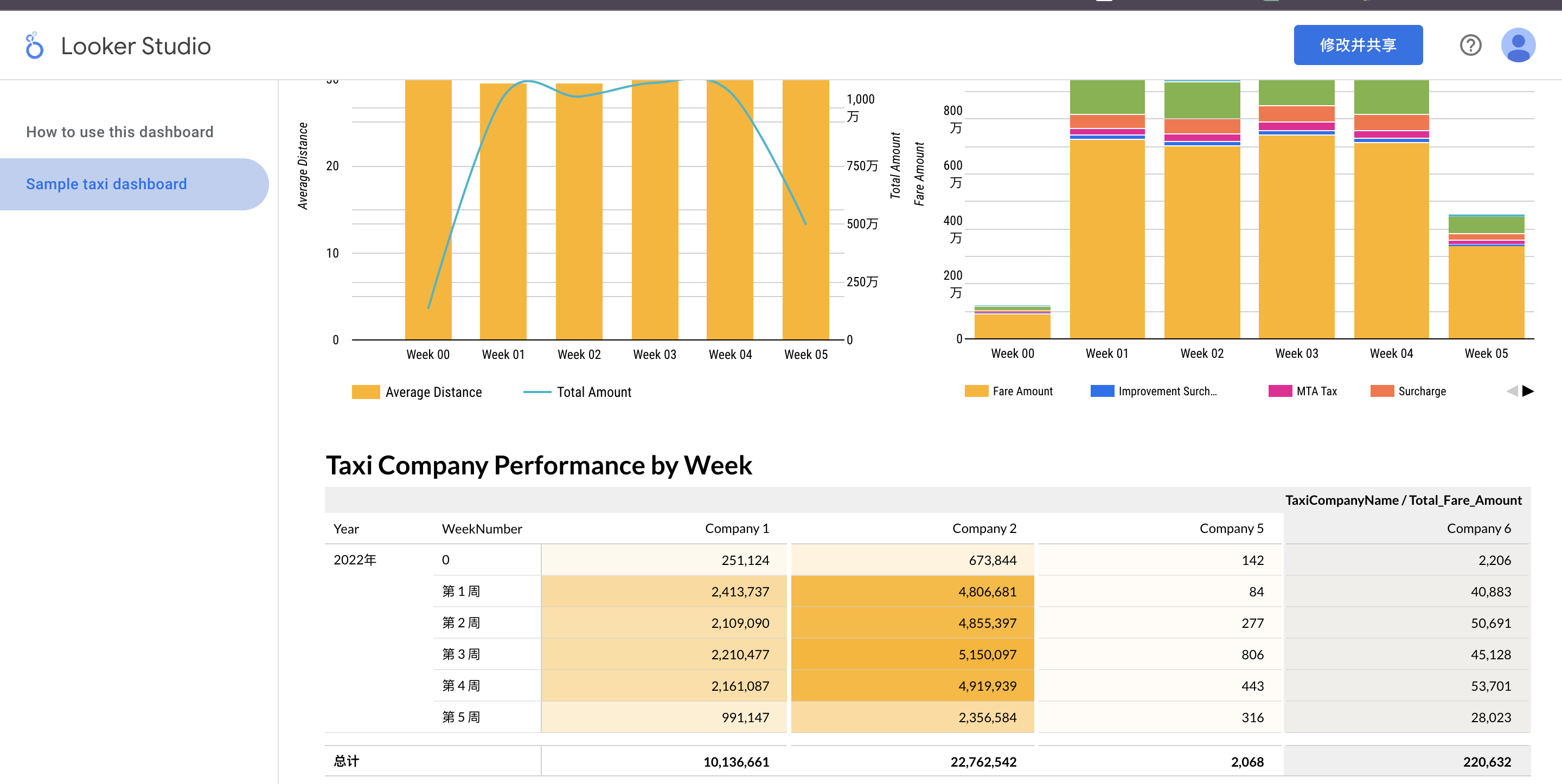

3.查看lookerstudio可视化

Bigquery 数据分析仓库

-- Copyright 2023 Google LLC

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

/*

Use Cases:

- BigQuery supports full SQL syntax and many analytic functions that make complex queries of lots of data easy

Description:

- Show joins, date functions, rank, partition, pivot

Reference:

- Rank/Partition: https://cloud.google.com/bigquery/docs/reference/standard-sql/analytic-function-concepts

- Pivot: https://cloud.google.com/bigquery/docs/reference/standard-sql/query-syntax#pivot_operator

Clean up / Reset script:

n/a

*/

--Rank, Pivot, Json

-- Query: Get trips over $50 for each day of the week.

-- Shows: Date Functions, Joins, Group By, Having, Ordinal Group/Having

SELECT FORMAT_DATE("%w", Pickup_DateTime) AS WeekdayNumber,

FORMAT_DATE("%A", Pickup_DateTime) AS WeekdayName,

vendor.Vendor_Description,

payment_type.Payment_Type_Description,

SUM(taxi_trips.Total_Amount) AS high_value_trips

FROM `mec-test-344202.ds_edw.taxi_trips` AS taxi_trips

INNER JOIN `mec-test-344202.ds_edw.vendor` AS vendor

ON cast(taxi_trips.Vendor_Id as INT64) = vendor.Vendor_Id

AND taxi_trips.Pickup_DateTime BETWEEN '2022-01-01' AND '2022-02-01'

LEFT JOIN `mec-test-344202.ds_edw.payment_type` AS payment_type

ON cast(taxi_trips.payment_type as INT64) = payment_type.Payment_Type_Id

GROUP BY 1, 2, 3, 4

HAVING SUM(taxi_trips.Total_Amount) > 50

ORDER BY WeekdayNumber, 3, 4;

-- Query: amounts (Cash/Credit) by passenger type

WITH TaxiDataRanking AS

(

SELECT CAST(Pickup_DateTime AS DATE) AS Pickup_Date,

cast(taxi_trips.payment_type as INT64) as Payment_Type_Id,

taxi_trips.Passenger_Count,

taxi_trips.Total_Amount,

RANK() OVER (PARTITION BY CAST(Pickup_DateTime AS DATE),

taxi_trips.payment_type

ORDER BY taxi_trips.Passenger_Count DESC,

taxi_trips.Total_Amount DESC) AS Ranking

FROM `mec-test-344202.ds_edw.taxi_trips` AS taxi_trips

WHERE taxi_trips.Pickup_DateTime BETWEEN '2022-01-01' AND '2022-02-01'

AND cast(taxi_trips.payment_type as INT64) IN (1,2)

)

SELECT Pickup_Date,

Payment_Type_Description,

Passenger_Count,

Total_Amount

FROM TaxiDataRanking

INNER JOIN `mec-test-344202.ds_edw.payment_type` AS payment_type

ON TaxiDataRanking.Payment_Type_Id = payment_type.Payment_Type_Id

WHERE Ranking = 1

ORDER BY Pickup_Date, Payment_Type_Description;

-- Query: data summed by payment type and passenger count, then pivoted based upon payment type

WITH MonthlyData AS

(

SELECT FORMAT_DATE("%B", taxi_trips.Pickup_DateTime) AS MonthName,

FORMAT_DATE("%m", taxi_trips.Pickup_DateTime) AS MonthNumber,

CASE WHEN cast(taxi_trips.payment_type as INT64) = 1 THEN 'Credit'

WHEN cast(taxi_trips.payment_type as INT64) = 2 THEN 'Cash'

WHEN cast(taxi_trips.payment_type as INT64) = 3 THEN 'NoCharge'

WHEN cast(taxi_trips.payment_type as INT64) = 4 THEN 'Dispute'

END AS PaymentDescription,

taxi_trips.Passenger_Count,

taxi_trips.Total_Amount

FROM `mec-test-344202.ds_edw.taxi_trips` AS taxi_trips

WHERE taxi_trips.Pickup_DateTime BETWEEN '2022-01-01' AND '2022-02-01'

AND Passenger_Count IS NOT NULL

AND cast(payment_type as INT64) IN (1,2,3,4)

)

SELECT MonthName,

Passenger_Count,

FORMAT("%'d", CAST(Credit AS INTEGER)) AS Credit,

FORMAT("%'d", CAST(Cash AS INTEGER)) AS Cash,

FORMAT("%'d", CAST(NoCharge AS INTEGER)) AS NoCharge,

FORMAT("%'d", CAST(Dispute AS INTEGER)) AS Dispute

FROM MonthlyData

PIVOT(SUM(Total_Amount) FOR PaymentDescription IN ('Credit', 'Cash', 'NoCharge', 'Dispute'))

ORDER BY MonthNumber, Passenger_Count;

-- Query: data pivoted by payment type

WITH MonthlyData AS

(

SELECT FORMAT_DATE("%B", taxi_trips.Pickup_DateTime) AS MonthName,

FORMAT_DATE("%m", taxi_trips.Pickup_DateTime) AS MonthNumber,

CASE WHEN cast(taxi_trips.payment_type as INT64) = 1 THEN 'Credit'

WHEN cast(taxi_trips.payment_type as INT64) = 2 THEN 'Cash'

WHEN cast(taxi_trips.payment_type as INT64) = 3 THEN 'NoCharge'

WHEN cast(taxi_trips.payment_type as INT64) = 4 THEN 'Dispute'

END AS PaymentDescription,

SUM(taxi_trips.Total_Amount) AS Total_Amount

FROM `mec-test-344202.ds_edw.taxi_trips` AS taxi_trips

WHERE taxi_trips.Pickup_DateTime BETWEEN '2022-01-01' AND '2022-02-01'

AND Passenger_Count IS NOT NULL

AND cast(taxi_trips.payment_type as INT64) IN (1,2,3,4)

GROUP BY 1, 2, 3

)

SELECT MonthName,

FORMAT("%'d", CAST(Credit AS INTEGER)) AS Credit,

FORMAT("%'d", CAST(Cash AS INTEGER)) AS Cash,

FORMAT("%'d", CAST(NoCharge AS INTEGER)) AS NoCharge,

FORMAT("%'d", CAST(Dispute AS INTEGER)) AS Dispute

FROM MonthlyData

PIVOT(SUM(Total_Amount) FOR PaymentDescription IN ('Credit', 'Cash', 'NoCharge', 'Dispute'))

ORDER BY MonthNumber;

-- Query: See what day of the week in each month has the greatest amount (that's the month/day to work)

WITH WeekdayData AS

(

SELECT FORMAT_DATE("%B", Pickup_DateTime) AS MonthName,

FORMAT_DATE("%m", Pickup_DateTime) AS MonthNumber,

FORMAT_DATE("%A", Pickup_DateTime) AS WeekdayName,

SUM(taxi_trips.Total_Amount) AS Total_Amount

FROM `mec-test-344202.ds_edw.taxi_trips` AS taxi_trips

WHERE taxi_trips.Pickup_DateTime BETWEEN '2022-01-01' AND '2022-02-01'

AND cast(taxi_trips.payment_type as INT64) IN (1,2,3,4)

GROUP BY 1, 2, 3

)

SELECT MonthName,

FORMAT("%'d", CAST(Sunday AS INTEGER)) AS Sunday,

FORMAT("%'d", CAST(Monday AS INTEGER)) AS Monday,

FORMAT("%'d", CAST(Tuesday AS INTEGER)) AS Tuesday,

FORMAT("%'d", CAST(Wednesday AS INTEGER)) AS Wednesday,

FORMAT("%'d", CAST(Thursday AS INTEGER)) AS Thursday,

FORMAT("%'d", CAST(Friday AS INTEGER)) AS Friday,

FORMAT("%'d", CAST(Saturday AS INTEGER)) AS Saturday,

FROM WeekdayData

PIVOT(SUM(Total_Amount) FOR WeekdayName IN ('Sunday','Monday','Tuesday','Wednesday','Thursday','Friday','Saturday'))

ORDER BY MonthNumber;数据仓库删除部署资源

terraform destroy

代码地址:

https://github.com/terraform-google-modules/terraform-google-bigquery/tree/645e382bfe830c3111f5fa6cbe7bae8f87e691f4

参考文档:

https://cloud.google.com/architecture/big-data-analytics/data-warehouse?hl=zh_CN&_ga=2.185801642.-2097802361.1683768839#delete-deployment